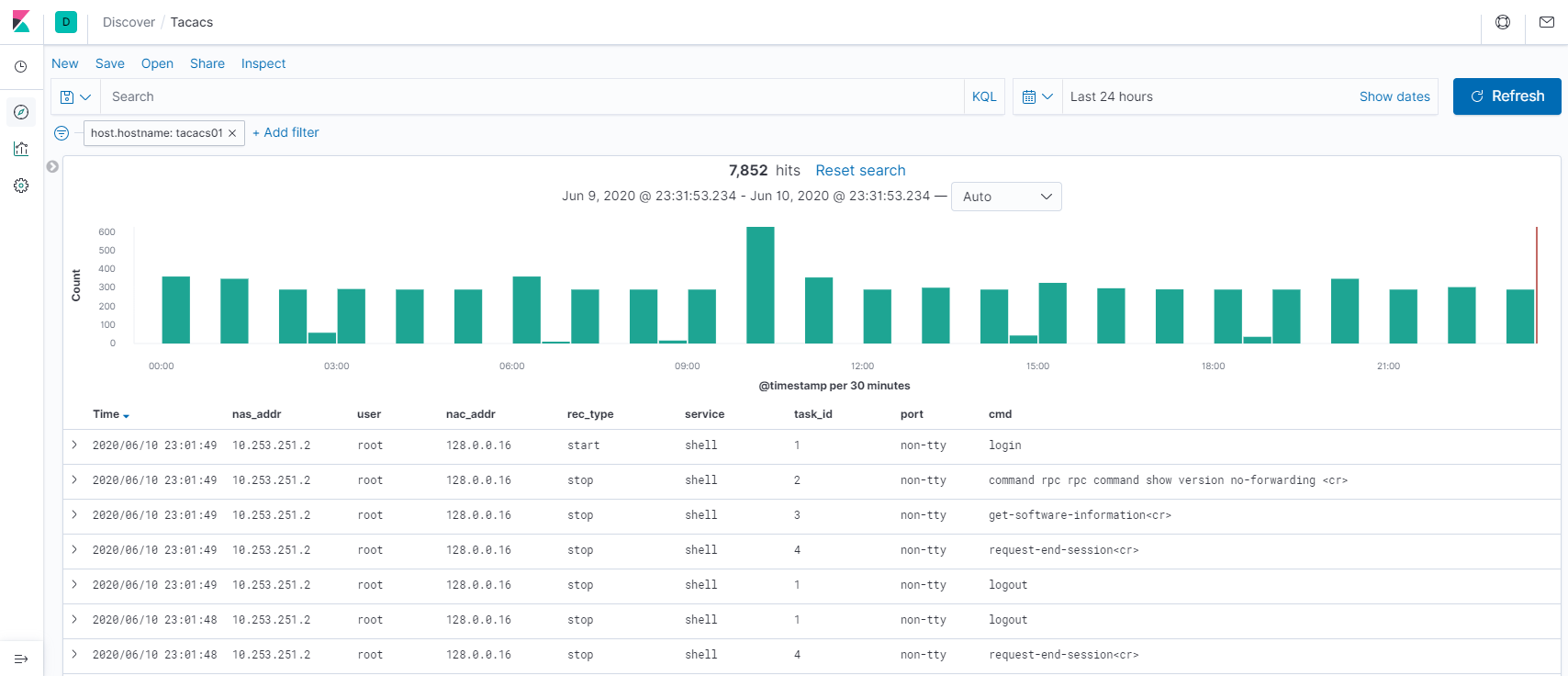

We run a tac_plus TACACS+ server, and ingest the access and accounting data into an ELK stack. Following is a short run-through on parsing the logging data into logstash.

Let’s look at a few logging lines:

# login to a networking device at 10.10.251.20 from 10.10.248.8 by joe.user 2020-06-10 15:05:55 +1000 10.10.251.20 joe.user 10.10.248.8 shell login succeeded # command executed on a Juniper device 2020-06-10 15:06:01 +1000 10.10.251.20 joe.user ttyp1 10.253.10.8 stop task_id=3 service=shell session_pid = 21075 cmd=show interfaces <cr> # command executed on a Cisco device 2020-06-10 06:03:22 +1000 10.10.253.48 joe.user tty2 10.10.253.100 stop task_id=336970 timezone=AEST service=shell start_time=1591733002 priv-lvl=15 cmd=configure terminal <cr>

Taken from http://www.pro-bono-publico.de/projects/tac_plus.html

The following pattern matches the first six tokens:

Accounting records are text lines containing tab-separated fields. The first 6 fields are always the same. These are:

Following these, a variable number of fields are written, depending on the accounting record type. All are of the form attribute=value. There will always be a task_id field.

- timestamp

- NAS address

- username

- port

- NAC address

- record type

NOT_TAB ([^\t]+)

NOT_TAB2 ([^\t]*)

TACACS_DATE %{YEAR:year}-%{MONTHNUM:month}-%{MONTHDAY:day} %{TIME:time} %{ISO8601_TIMEZONE}

TACACS_PREFIX %{TACACS_DATE:tac_timestamp}\t%{NOT_TAB2:nas_addr}\t%{NOT_TAB2:user}\t%{NOT_TAB2:port}\t%{NOT_TAB2:nac_addr}\t%{NOT_TAB2:rec_type}

Then we can use in the following logstash filter:

filter {

if [fields][document_type] == "tacacs" {

kv {

allow_duplicate_values => false

field_split => "\t"

}

grok {

patterns_dir => ["/etc/logstash/conf.d/patterns"]

match => ["message", "%{TACACS_PREFIX}"]

}

date {

match => ["tac_timestamp", "YYYY-MM-dd HH:mm:ss Z"]

}

}

}

We extract all the key=value fields, extract the six fields of the common prefix, and overwrite the date data.